Docker has revolutionized the way we develop, ship, and run applications by providing a consistent environment across various stages of development and production. This consistency is achieved through the use of multiple interconnected components, each playing a critical role in the Docker ecosystem. Let’s dive into these components to understand their functionalities and how they contribute to Docker’s powerful capabilities.

Docker Engine

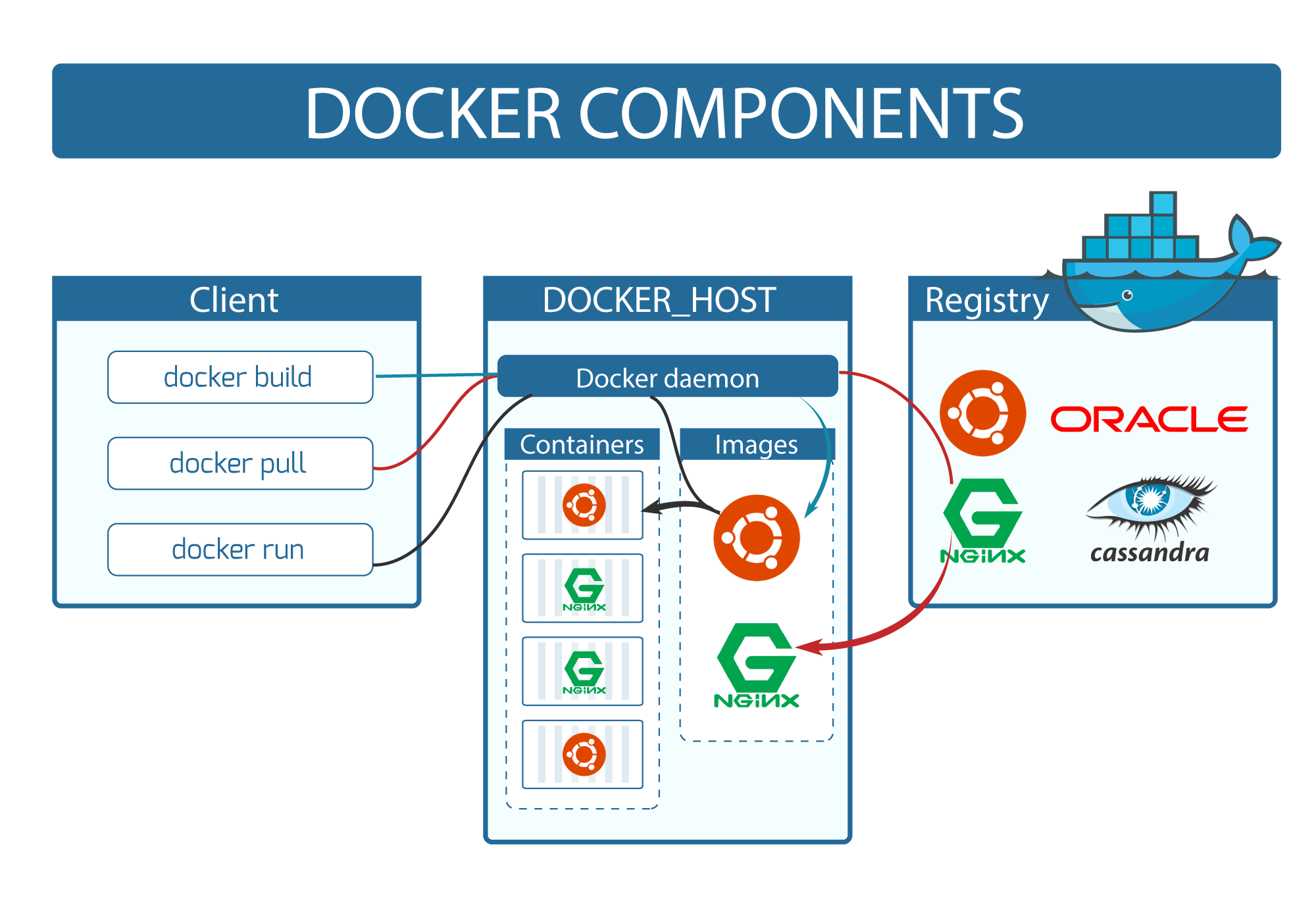

The Docker Engine is the core of the Docker system. It is an open-source, client-server application for building images and for creating, running, and managing containers. The Docker Engine consists of three main components:

Server (Docker daemon, dockerd): The Docker daemon is a long-running background process responsible for creating, running, and managing containers. It listens for Docker API requests and manages Docker objects such as images, containers, networks, and volumes.

REST API: This interface allows communication between applications and the Docker daemon. It provides a standardized way for programs to interact with Docker and instruct the daemon on what actions to perform.

Command Line Interface (CLI): The Docker CLI is used by developers to issue commands to the Docker daemon. It translates user commands into API calls that are sent to the Docker daemon for execution.
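To make the client-server split concrete, here is a minimal Python sketch that sends an HTTP request straight to the daemon's REST API, which is what the CLI does for you. It assumes a Linux host where dockerd listens on the default Unix socket /var/run/docker.sock and that your user is allowed to access it. Only the standard library is used. The names UnixHTTPConnection and engine_get are hypothetical helpers written for this example and are not part of any Docker SDK.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that connects to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # "localhost" is only a placeholder for the HTTP Host header;
        # connect() below opens the Unix socket, not a TCP connection.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def engine_get(path: str, socket_path: str = "/var/run/docker.sock"):
    """Send GET <path> to the Engine API and return the decoded JSON body."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        body = resp.read()
        if resp.status != 200:
            raise RuntimeError(f"Engine API returned {resp.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()


# Example usage against a real daemon:
#   info = engine_get("/version")               # daemon version and API version
#   print(info["Version"], info["ApiVersion"])
#   running = engine_get("/containers/json")    # roughly what `docker ps` lists
```

Each CLI command maps to one or more calls like these. For example, `docker ps` corresponds to `GET /containers/json`.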

Docker Client

The Docker Client is the primary interface through which users interact with Docker. When a user runs a Docker command (e.g., docker run, docker build), the client sends it to the Docker daemon as a call to the Docker REST API. A single client can talk to more than one daemon, for example a local daemon and a remote one selected with the -H flag, the DOCKER_HOST environment variable, or a Docker context. This makes it a versatile tool for managing containers across different environments.
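Which daemon the client talks to comes down to an address such as unix:///var/run/docker.sock, tcp://host:2376, or ssh://user@host. The Python function below is a hypothetical, simplified model of that lookup. It honours DOCKER_HOST and otherwise falls back to the Linux default socket. It deliberately ignores the -H flag, Docker contexts, TLS settings, and the named pipe that Docker uses on Windows.

```python
import os
from typing import Mapping, Optional, Tuple
from urllib.parse import urlparse

# Assumption: the Linux default endpoint. Other platforms differ.
DEFAULT_DOCKER_HOST = "unix:///var/run/docker.sock"


def resolve_daemon(env: Optional[Mapping[str, str]] = None) -> Tuple[str, str]:
    """Return (scheme, address) of the daemon the client would talk to.

    Simplified model: uses DOCKER_HOST if set and non-empty, else the default
    socket. Contexts, the -H flag and TLS options are not modelled.
    """
    if env is None:
        env = os.environ
    host = env.get("DOCKER_HOST") or DEFAULT_DOCKER_HOST
    url = urlparse(host)
    if url.scheme == "unix":
        return "unix", url.path            # filesystem path of the socket
    if url.scheme in ("tcp", "ssh"):
        return url.scheme, url.netloc      # host[:port] or user@host[:port]
    raise ValueError(f"unsupported DOCKER_HOST scheme: {host!r}")


# Example: the same client pointed at two different daemons.
#   resolve_daemon({})                                    -> local socket
#   resolve_daemon({"DOCKER_HOST": "tcp://10.0.0.5:2376"}) -> remote daemon
```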

Docker Hub

Docker Hub is Docker’s cloud-based registry service and hosts one of the largest collections of container images, including the Docker Official Images. It provides a platform for developers to share their images, collaborate on projects, and automate workflows. Docker Hub hosts both public and private repositories, allowing users to control who can access their images.

Docker Registries

Docker Registries are repositories where Docker images are stored and distributed. There are two main types of Docker registries:

Public Registry: The default public registry is Docker Hub, which hosts a vast collection of images available for public use. Docker Hub allows users to push and pull images, enabling easy sharing and collaboration.

Private Registry: Organizations can set up private registries to store proprietary images securely. This provides greater control over image distribution and access.

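When you run docker pull, or docker run with an image that is not already available locally, Docker fetches the image from the registry named in the image reference. If no registry is named, it defaults to Docker Hub. Conversely, the docker push command uploads an image to a registry.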
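The registry a pull or push talks to is encoded in the image reference itself. The sketch below approximates the normalization Docker applies to short names:

- If the first path component looks like a host name (it contains `.` or `:`, or is `localhost`), it is treated as the registry.
- Otherwise, Docker Hub (`docker.io`) is assumed, and single-component Hub names get the `library/` namespace used for official images.
- A missing tag defaults to `latest`.

`parse_image_ref` and `ImageRef` are hypothetical names. The helper skips the validation the real parser performs, and `registry.example.com` is only a placeholder host.

```python
from typing import NamedTuple, Optional


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def parse_image_ref(ref: str) -> ImageRef:
    """Split an image reference into registry, repository, tag and digest."""
    name, digest = ref, None
    if "@" in name:                          # e.g. nginx@sha256:...
        name, digest = name.split("@", 1)

    tag = None
    # A ":" after the last "/" introduces a tag; one before it is a registry port.
    if name.rfind(":") > name.rfind("/"):
        name, tag = name.rsplit(":", 1)

    first, sep, rest = name.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest  # explicit registry host
    else:
        registry, repository = "docker.io", name

    # Official images on Docker Hub live under the "library/" namespace.
    if registry == "docker.io" and "/" not in repository:
        repository = "library/" + repository

    if tag is None and digest is None:
        tag = "latest"
    return ImageRef(registry, repository, tag, digest)


# parse_image_ref("nginx")     -> docker.io, library/nginx, tag "latest"
# parse_image_ref("mysql:5.7") -> docker.io, library/mysql, tag "5.7"
```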

Docker Images

Docker Images are read-only templates used to create containers. An image is usually built from a Dockerfile, which contains a series of instructions specifying the image’s content and configuration. Docker images are composed of multiple layers, each representing a set of changes to the image’s filesystem. Layers are cached and shared between images that use the same ones, so only layers that are not already present need to be downloaded or stored. This keeps images lightweight and efficient to distribute.
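A toy model helps show what "each layer is a set of changes" means. This is an illustration of the idea, not Docker's storage format.

- In the hypothetical Python sketch below, a layer is a dictionary that maps file paths to contents, with `None` marking a deletion. Real layers are tar archives that record deletions with special whiteout files.
- Applying the layers in order yields the filesystem a container sees.
- Hashing each layer's content gives it a stable identifier. Identical layers therefore get identical IDs, which is why they can be stored and downloaded once and shared between images.

```python
import hashlib
import json
from typing import Dict, List, Optional

Layer = Dict[str, Optional[str]]  # path -> file content, or None for "deleted"


def layer_digest(layer: Layer) -> str:
    """Content-address a layer: identical changes always hash to the same ID."""
    canonical = json.dumps(layer, sort_keys=True).encode()
    return "sha256:" + hashlib.sha256(canonical).hexdigest()


def flatten(layers: List[Layer]) -> Dict[str, str]:
    """Apply layers bottom-to-top; later layers add, modify, or delete files."""
    fs: Dict[str, str] = {}
    for layer in layers:
        for path, content in layer.items():
            if content is None:
                fs.pop(path, None)   # deletion recorded by this layer
            else:
                fs[path] = content   # new or modified file
    return fs


# Two hypothetical images that share the same base layer.
base = {"/etc/os-release": "debian", "/tmp/cache": "junk"}
app_v1 = {"/app/main.py": "print('v1')", "/tmp/cache": None}
app_v2 = {"/app/main.py": "print('v2')", "/tmp/cache": None}

image_v1 = [base, app_v1]
image_v2 = [base, app_v2]
```

In this model, both images point to a base layer with the same digest, so it only needs to exist once. Only the small application layer differs between `v1` and `v2`.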

Docker Containers

Docker Containers are runnable instances of Docker images. They package an application together with its dependencies and run it in an isolated environment. Containers share the host system’s kernel but are isolated from one another, so by default processes in one container cannot see or interfere with processes in another. This isolation, combined with the lightweight nature of containers, makes them well suited to deploying microservices and scalable applications.

Dockerfile

A Dockerfile is a text file containing the instructions used to build a Docker image. It defines the base image, required packages, environment variables, and commands to run. Because the build steps are written down and can be kept under version control alongside your code, Dockerfiles make builds repeatable. Pinning the base image and package versions also helps ensure that the same image can be recreated consistently.

Docker Volumes

Docker Volumes are used to persist data generated and used by Docker containers. A container’s writable layer is deleted along with the container. Volumes, by contrast, are managed by Docker, stored on the host system, and can be shared between containers. This makes them the right choice when data must outlive a container (for example, when a container is removed and recreated) or be accessible from multiple containers.

Docker Desktop

Docker Desktop is an application that provides an easy-to-use interface for creating and managing containers on Windows, macOS, and Linux. It bundles Docker Engine, the Docker CLI, Docker Compose, an optional single-node Kubernetes cluster, and other tools for container development. Docker Desktop streamlines the setup process, making container development accessible to developers of all experience levels.

Docker Compose

Docker Compose is a tool for defining and running multi-container Docker applications. You describe the application’s services, networks, and volumes in a single YAML file, then start all of the services with a single command.

Here’s a basic example of a Docker Compose YAML file:

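```yaml
# The top-level "version" key seen in older examples is obsolete in the
# current Compose Specification, so it is omitted here.
services:
  web:
    image: nginx:latest
    ports:
      - "8080:80"   # host port 8080 -> container port 80
  db:
    image: mysql:5.7
    environment:
      MYSQL_ROOT_PASSWORD: password   # demo value only; never use in production
```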

In this example, we have two services defined: web and db. The web service uses the latest Nginx image and maps port 8080 on the host to port 80 in the container. The db service uses the MySQL 5.7 image and sets the MYSQL_ROOT_PASSWORD environment variable.
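The "8080:80" entry uses Compose's short port syntax. It has the general form `[HOST_IP:][HOST_PORT:]CONTAINER_PORT[/PROTOCOL]`, where a missing host port means Docker picks one.

The hypothetical Python helper below is a hedged illustration that splits such a string into its parts. It handles single ports only and ignores the port ranges and IPv6 host addresses that Compose also accepts.

```python
from typing import NamedTuple, Optional


class PortMapping(NamedTuple):
    host_ip: Optional[str]      # None: publish on all host interfaces
    host_port: Optional[int]    # None: Docker assigns an ephemeral host port
    container_port: int
    protocol: str


def parse_port(spec: str) -> PortMapping:
    """Parse a short-syntax port such as "8080:80" or "127.0.0.1:5353:53/udp"."""
    addr, _, protocol = spec.partition("/")
    parts = addr.split(":")
    host_ip: Optional[str] = None
    host_port: Optional[str] = None
    if len(parts) == 1:          # "80"
        container = parts[0]
    elif len(parts) == 2:        # "8080:80"
        host_port, container = parts
    elif len(parts) == 3:        # "127.0.0.1:8080:80" or "127.0.0.1::80"
        host_ip, host_port, container = parts
    else:
        raise ValueError(f"unsupported port spec: {spec!r}")
    return PortMapping(
        host_ip=host_ip or None,
        host_port=int(host_port) if host_port else None,
        container_port=int(container),
        protocol=protocol or "tcp",
    )


# parse_port("8080:80") describes the web service above: host 8080 -> container 80 over TCP.
```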

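Save the file as docker-compose.yml (current Compose releases prefer the name compose.yaml but still accept the older one). Then start the application from the same directory with the following command:

```shell
docker compose up
```

This uses the Compose v2 plugin. Older installations that still ship the standalone Compose v1 binary use docker-compose up instead.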

Docker Swarm

Docker Swarm is Docker’s native clustering and orchestration tool, built into Docker Engine as swarm mode. It lets you join multiple Docker hosts into a cluster, or swarm, and manage them as a single unit. Docker Swarm has two types of nodes: manager nodes and worker nodes.

Manager Nodes: Manager nodes handle cluster management tasks such as scheduling, orchestration, and managing the state of the cluster.

Worker Nodes: Worker nodes receive and execute tasks from the manager nodes.

You can initialize a Docker Swarm on a node using the following command:

```shell
docker swarm init --advertise-addr <MANAGER-IP>
```

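This command initializes the current node as a manager node. It also prints a docker swarm join command, including a join token, which you run on other hosts to add them to the swarm as worker nodes. If you need that command again later, run docker swarm join-token worker on a manager node.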

Once you have a Docker Swarm set up, you can deploy services to it individually with docker service create, or as a group from a Compose file with docker stack deploy. Docker Swarm provides features for scaling, rolling updates, and load balancing across the cluster.

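Docker Swarm is a capable tool for managing and scaling containerized applications, and it provides high availability and fault tolerance. If a worker node fails, its tasks are rescheduled onto healthy nodes. Running several manager nodes lets the cluster keep operating as long as a majority of the managers remains available.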
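Manager fault tolerance comes from the Raft consensus algorithm that swarm managers use to keep the cluster state consistent. A swarm can only make changes to that state while a majority (quorum) of its managers is reachable. Docker's documentation states that a swarm with N managers tolerates the loss of at most (N-1)/2 managers. The small Python sketch below just does that arithmetic; the function names are illustrative.

```python
def quorum(managers: int) -> int:
    """Smallest majority of managers that must be reachable for Raft to make progress."""
    if managers < 1:
        raise ValueError("a swarm needs at least one manager")
    return managers // 2 + 1


def tolerated_failures(managers: int) -> int:
    """Managers that can fail while a quorum still remains, i.e. (N - 1) // 2."""
    return managers - quorum(managers)


for n in range(1, 8):
    print(f"{n} managers: quorum {quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

The table this prints shows why odd manager counts are recommended. Four managers tolerate only one failure, the same as three, while requiring a larger quorum.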

Conclusion

Docker’s components work in harmony to provide a robust and flexible platform for containerized applications. From the core Docker Engine to the user-friendly Docker Desktop, each component plays a vital role in the Docker ecosystem. By understanding these components, developers can leverage Docker’s full potential to build, ship, and run applications more efficiently.

Thank you for taking the time to read my blog. Your feedback is immensely valuable to me. Please feel free to share your thoughts and suggestions.